Restoring RabbitMQ functionality

Environment

Three virtual machines have been set up and joined into a cluster (see Cluster construction).

Master node yzm1 (128), slave node yzm2 (129), slave node yzm3 (130)

The master node is healthy, but a slave node is down.

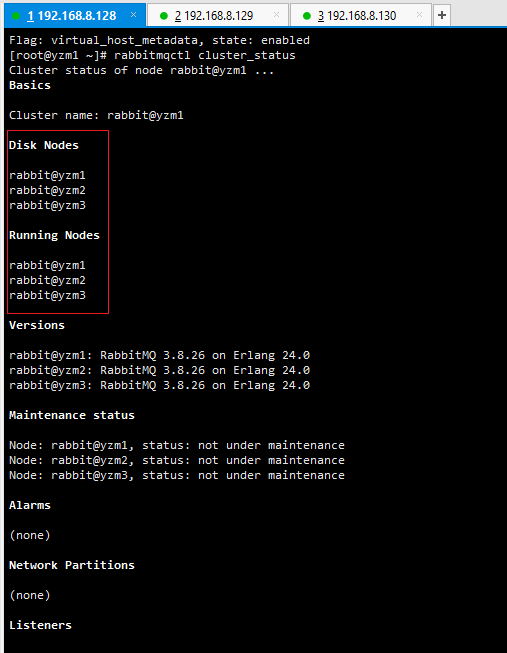

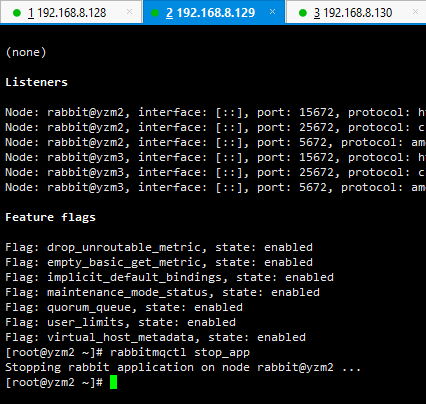

Check the cluster status. All three virtual machines are running normally:

Checking the cluster

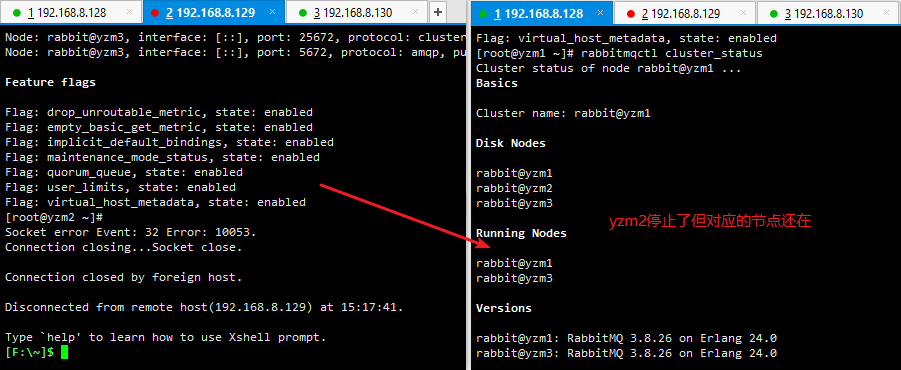
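The status check in the screenshot can be reproduced on any running node with `rabbitmqctl cluster_status`, and `-n` selects which node to ask. The sketch below is a minimal wrapper under stated assumptions: `run`, `DRY_RUN` and `show_cluster_status` are hypothetical helpers (not part of RabbitMQ) that let you preview the command before executing it, and the node names follow this article's `rabbit@yzm*` scheme.

```bash
# Hypothetical helper: print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Show the cluster as seen by one node; with no argument, query the local node.
# Usage: show_cluster_status [rabbit@yzm1]
show_cluster_status() {
  if [ $# -gt 0 ]; then
    run rabbitmqctl -n "$1" cluster_status
  else
    run rabbitmqctl cluster_status
  fi
}
```

Asking each of `rabbit@yzm1`, `rabbit@yzm2` and `rabbit@yzm3` in turn is a quick way to confirm that all nodes report the same membership.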

Take yzm2 down by shutting down its virtual machine:

yzm2

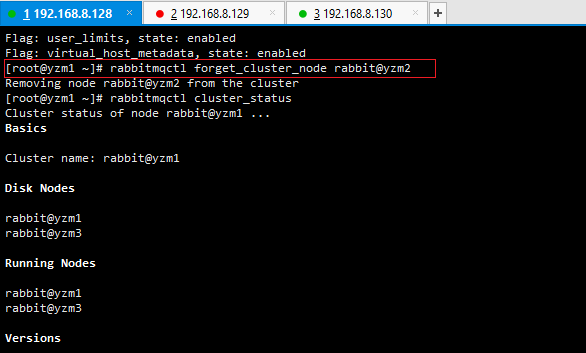

Remove the yzm2 node from the cluster:

yzm2

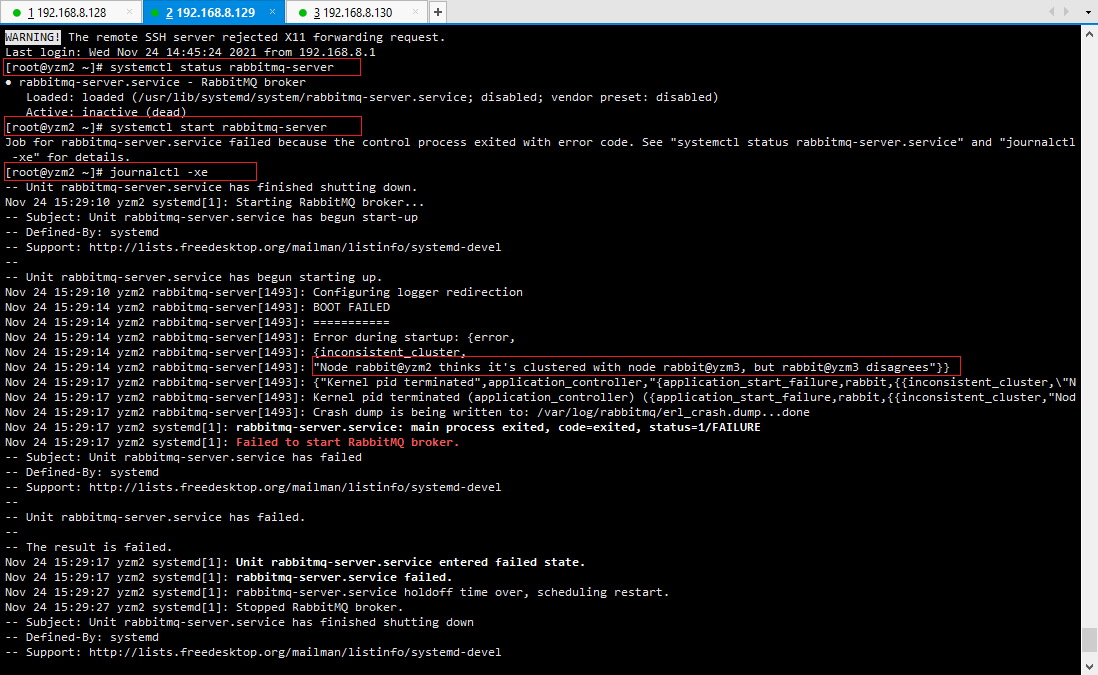

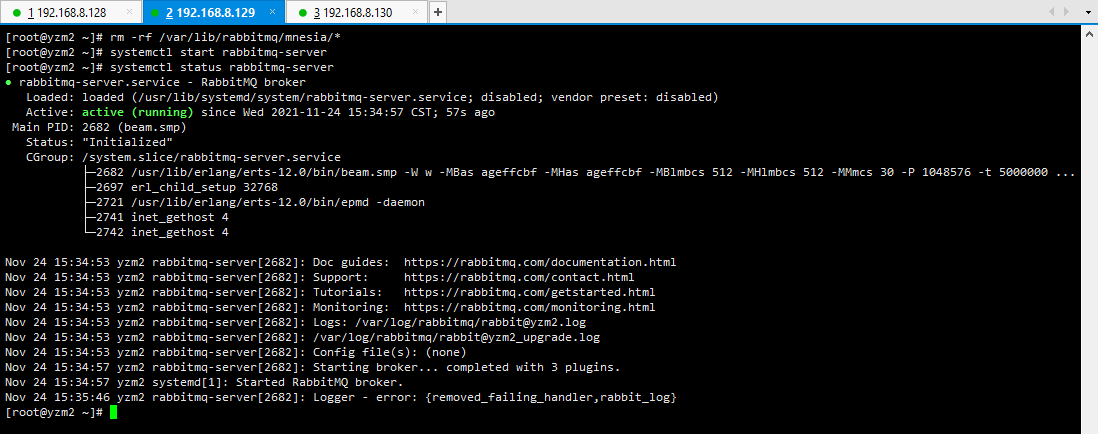
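The removal itself only appears in the screenshots. Assuming it was done with `rabbitmqctl forget_cluster_node`, which is run against a node that is still up (yzm1 is assumed here) while the node being removed is offline, a sketch looks like this. `run`, `DRY_RUN` and `forget_node` are hypothetical helpers for previewing and wrapping the call.

```bash
# Hypothetical helper: print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Ask a running node ($2) to remove an offline node ($1) from the cluster.
# The node being removed must already be stopped.
forget_node() {
  if [ $# -ne 2 ]; then
    echo "usage: forget_node <offline-node> <running-node>" >&2
    return 2
  fi
  run rabbitmqctl -n "$2" forget_cluster_node "$1"
}
```

For this scenario the call would be `forget_node rabbit@yzm2 rabbit@yzm1`.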

Restart the RabbitMQ service on yzm2:

Restarting the RabbitMQ service

Error message: Node rabbit@yzm2 thinks it’s clustered with node rabbit@yzm3, but rabbit@yzm3 disagrees

This means that yzm2 did not leave the cluster cleanly when it went down: it still keeps its old cluster membership information locally, while the other nodes have already removed it from the cluster.

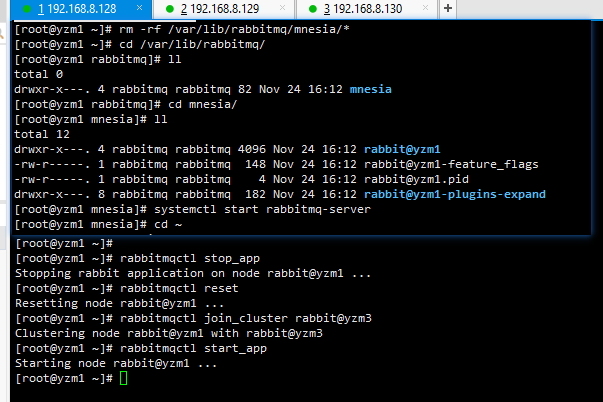

Solution: clear the cluster configuration information that yzm2 has retained:

rm -rf /var/lib/rabbitmq/mnesia/*

Clearing information

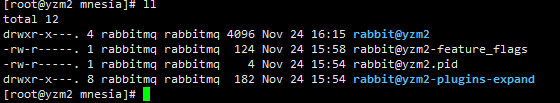

Once the files in the mnesia directory are deleted, they are regenerated when the RabbitMQ node starts again.
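Wiping the mnesia directory of a node that is still running is risky, so a safer ordering is stop, wipe, start. The sketch below assumes a systemd unit named `rabbitmq-server` and the default data path; `MNESIA_DIR`, `run`, `DRY_RUN` and `wipe_node_state` are hypothetical names. Keep in mind this deletes everything stored locally on that node, not only its cluster membership.

```bash
# Hypothetical helper: print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Reset a node's local state by wiping its mnesia directory.
# Assumes systemd and the unit name rabbitmq-server; MNESIA_DIR overrides the path.
wipe_node_state() {
  local dir="${MNESIA_DIR:-/var/lib/rabbitmq/mnesia}"
  run systemctl stop rabbitmq-server
  # ${dir:?} aborts on an empty value, so this can never become "rm -rf /*".
  run rm -rf "${dir:?}"/*
  run systemctl start rabbitmq-server
}
```

Running it once with `DRY_RUN=1` shows exactly which files would be removed before anything is touched.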

Data recovery

If you accidentally delete the mnesia directory itself, recreate it and make sure that its owner user and group are both rabbitmq.

Commands:

mkdir -p /var/lib/rabbitmq/mnesia
chown -R rabbitmq:rabbitmq /var/lib/rabbitmq/

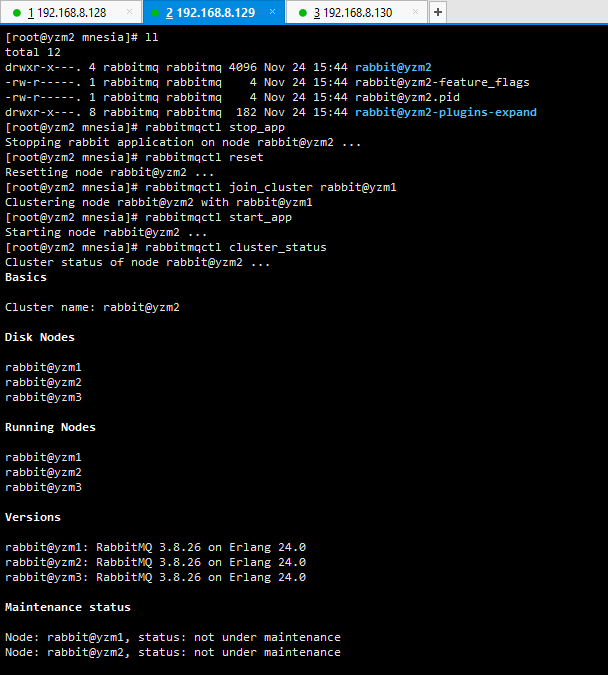
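A missed `-R` or a wrong owner on a subdirectory is easy to overlook, so it can help to recreate the directory and then list anything that is not owned as expected. `RABBIT_HOME`, `RABBIT_USER`, `run`, `DRY_RUN`, `recreate_mnesia_dir` and `wrong_owner` are hypothetical names; the defaults match the paths and the `rabbitmq:rabbitmq` owner used in this article.

```bash
# Hypothetical helper: print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Recreate the mnesia directory and give the whole data tree to the rabbitmq user.
recreate_mnesia_dir() {
  local home="${RABBIT_HOME:-/var/lib/rabbitmq}" user="${RABBIT_USER:-rabbitmq}"
  run mkdir -p "$home/mnesia"
  run chown -R "$user:$user" "$home"
}

# List everything under $1 that is NOT owned by user $2 and group $3 (default: $2).
# Empty output means the ownership is correct.
wrong_owner() {
  local dir="$1" user="$2" group="${3:-$2}"
  find "$dir" \( ! -user "$user" -o ! -group "$group" \) -print
}
```

After `recreate_mnesia_dir`, `wrong_owner /var/lib/rabbitmq rabbitmq` should print nothing.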

Have yzm2 rejoin the cluster:

yzm2 rejoining the cluster
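The rejoin is likewise only shown as a screenshot. The usual rabbitmqctl sequence on the joining node is `stop_app`, `reset`, `join_cluster`, `start_app`; the sketch assumes yzm2 joins through `rabbit@yzm1`. After the mnesia wipe the `reset` is redundant but harmless. `run`, `DRY_RUN` and `rejoin_cluster` are hypothetical helpers.

```bash
# Hypothetical helper: print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Run on the node that should rejoin (yzm2 here), pointing it at a healthy member.
rejoin_cluster() {
  if [ $# -ne 1 ]; then
    echo "usage: rejoin_cluster <running-node>" >&2
    return 2
  fi
  run rabbitmqctl stop_app        # stop the RabbitMQ app, keep the Erlang node up
  run rabbitmqctl reset           # drop any leftover membership state
  run rabbitmqctl join_cluster "$1"
  run rabbitmqctl start_app
}
```

For this scenario: `rejoin_cluster rabbit@yzm1`, run on yzm2.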

The master node is down, while the slave nodes are healthy.

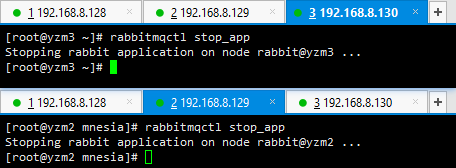

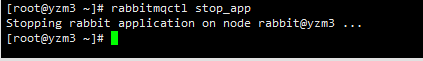

Shut down yzm3 and yzm2:

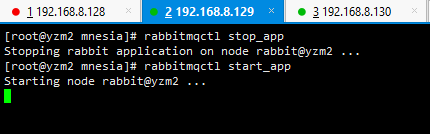

Shutting down yzm3 and yzm2

Then take yzm1 down by shutting down its virtual machine.

yzm1 is now the last node to go down, and the last node to go down is regarded as the master: when the cluster restarts, the other nodes wait for it to come back first.

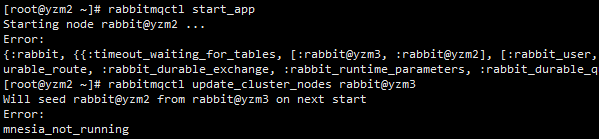

Start yzm2. It waits for yzm1 to start and fails to start once the wait times out:

Starting yzm2

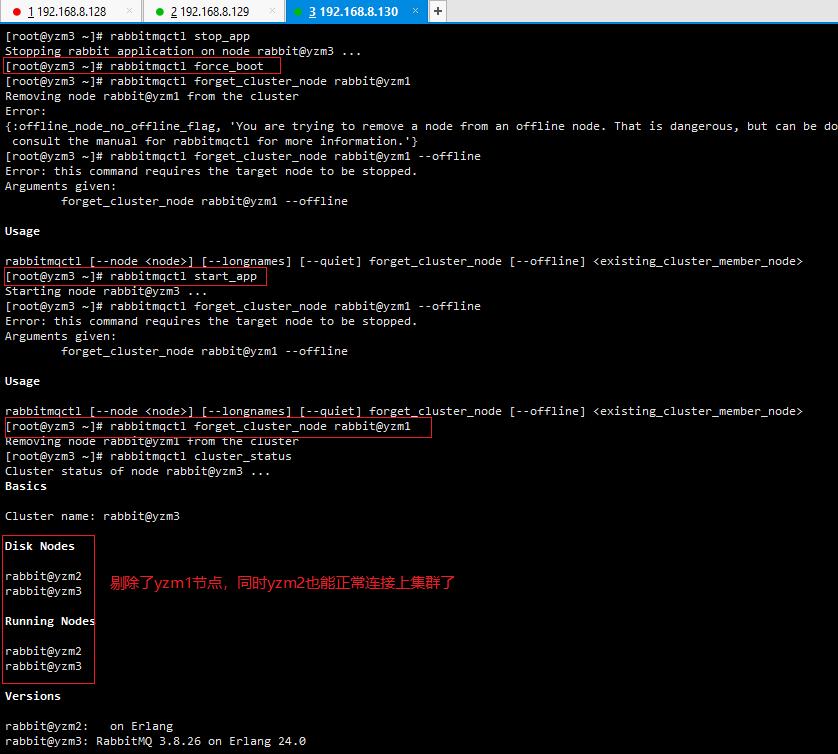

On yzm3, remove yzm1 from the cluster:

Removing yzm1

Main commands:

rabbitmqctl force_boot
rabbitmqctl start_app
rabbitmqctl forget_cluster_node rabbit@yzm1
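`rabbitmqctl force_boot` is meant to be run while the node's RabbitMQ server is stopped; it makes the next boot skip waiting for the missing peer. The sketch below runs the whole sequence on yzm3, but starts the service with systemd instead of `start_app`; the systemd unit name `rabbitmq-server` is an assumption, and `run`, `DRY_RUN` and `evict_lost_node` are hypothetical helpers.

```bash
# Hypothetical helper: print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Run on yzm3: boot without waiting for the lost node, then drop it from the cluster.
evict_lost_node() {
  if [ $# -ne 1 ]; then
    echo "usage: evict_lost_node <lost-node>" >&2
    return 2
  fi
  run systemctl stop rabbitmq-server   # force_boot expects the node to be stopped
  run rabbitmqctl force_boot           # next boot will not wait for the lost node
  run systemctl start rabbitmq-server
  run rabbitmqctl forget_cluster_node "$1"
}
```

For this scenario: `evict_lost_node rabbit@yzm1`, run on yzm3.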

I tried the --offline parameter many times but never got it to work, and I don’t know why.

For example:

rabbitmqctl forget_cluster_node --offline rabbit@yzm1
rabbitmqctl -n rabbit@yzm3 forget_cluster_node --offline rabbit@yzm1
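One likely reason these attempts failed: according to the rabbitmqctl documentation, `--offline` enables node removal *from an offline node*. It is intended for the case where every node is down and the last one to stop cannot be brought back, so the node running the command must itself be fully stopped, not just have its app stopped. A sketch of that usage on yzm3, assuming a systemd unit named `rabbitmq-server` (`run`, `DRY_RUN` and `evict_lost_node_offline` are hypothetical helpers):

```bash
# Hypothetical helper: print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Run on yzm3 while RabbitMQ on yzm3 is completely stopped.
evict_lost_node_offline() {
  if [ $# -ne 1 ]; then
    echo "usage: evict_lost_node_offline <lost-node>" >&2
    return 2
  fi
  run systemctl stop rabbitmq-server   # the local node must be offline for --offline
  run rabbitmqctl forget_cluster_node --offline "$1"
  run systemctl start rabbitmq-server
}
```

The documentation also warns that `--offline` should not be used outside that all-nodes-down situation, because it can leave the cluster inconsistent.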

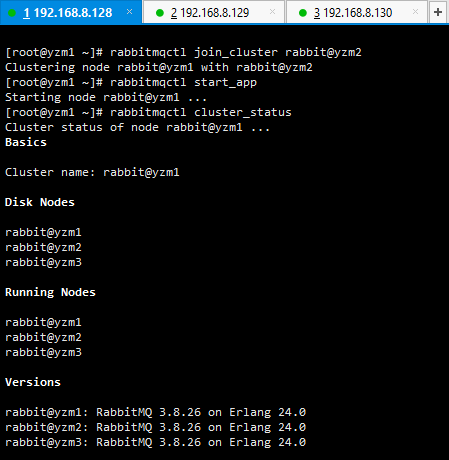

Finally, restart yzm1 and have it rejoin the cluster.

This is the same procedure as the yzm2 rejoin above: clear the node configuration information first, then check the cluster status:

Deleting configuration

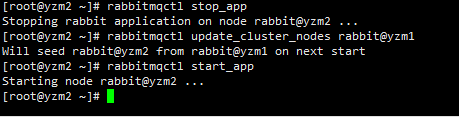

Updating node information before starting a node

The current cluster has nodes yzm2 and yzm3.

Nodes yzm2 and yzm3

Shut down node yzm2:

Shutting down node yzm2

Meanwhile, start yzm1 and have it rejoin the cluster (clear its node configuration information before starting the RabbitMQ service):

Starting yzm1

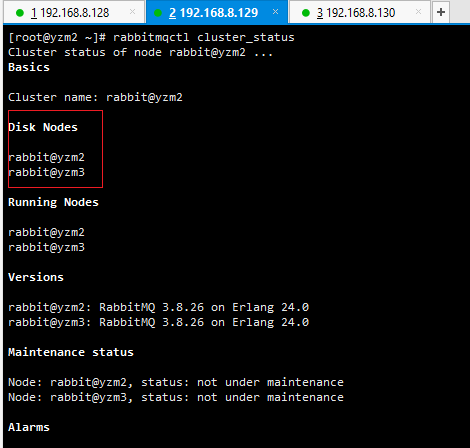

Shut down the yzm3 node, then start the yzm2 node:

Shutting down yzm3

Starting yzm2

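yzm2 waits until it times out and then reports an error. The cluster it remembers consists of yzm2 and yzm3, yzm3 is now down, and yzm2 has no record of yzm1 joining while it was offline.

The correct approach is to have yzm2 consult yzm1, which is running normally in the cluster, before starting:

rabbitmqctl update_cluster_nodes rabbit@yzm1

Checking yzm1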
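`update_cluster_nodes` targets exactly this case: a node that remembers an outdated membership is told to contact a node that is currently in the cluster when it boots. The command is run with the RabbitMQ application stopped, so a sketch of the full sequence on yzm2, assuming rabbitmqctl can reach the Erlang node on yzm2, is below. `run`, `DRY_RUN` and `refresh_membership` are hypothetical helpers.

```bash
# Hypothetical helper: print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Run on yzm2: point it at a current cluster member, then start it.
refresh_membership() {
  if [ $# -ne 1 ]; then
    echo "usage: refresh_membership <running-node>" >&2
    return 2
  fi
  run rabbitmqctl stop_app                  # the app must be stopped for this command
  run rabbitmqctl update_cluster_nodes "$1"
  run rabbitmqctl start_app
}
```

For this scenario: `refresh_membership rabbit@yzm1`, run on yzm2.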